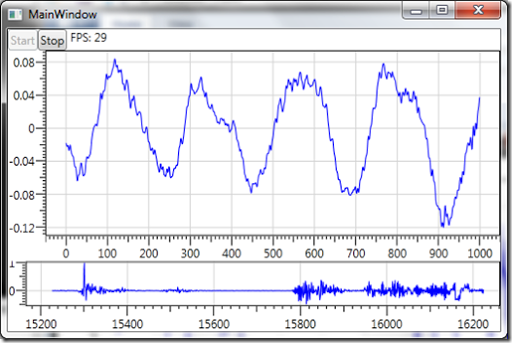

This is the first in a series of blog posts about visualising sound, in a similar way to an oscilloscope. The geek in me thought it’d be a fun thing to do, as well as a chance to investigate different technological approaches to implementing the app (which is interesting because it has to perform well in real time).

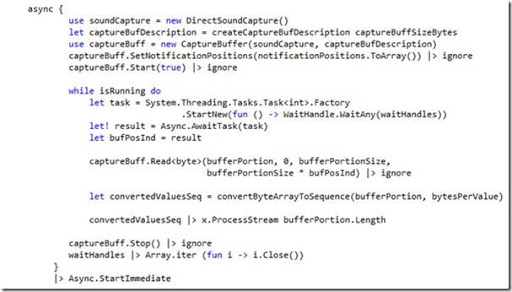

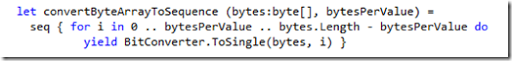

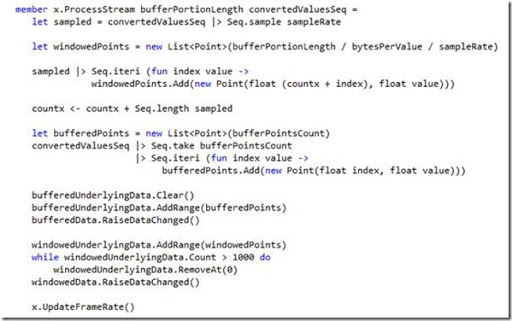

The nastiest bit of the code in ProcessStream is probably towards the end, where windowedUnderlyingData is manipulated to ensure that the window only contains 1000 samples. It would be nice to do this in a non-imperative way, using Seq.windowed, but the problem is that the sequence we’re operating on is only the result of one buffer read operation, whereas windowing should operate over the whole data stream, and the sequences can’t be combined into a single sequence using yield! as they’re all generated asynchronously. Similarly, the buffering takes non-overlapping windows over the input sequence, and unless it runs over the whole stream, it may miss samples off the end of each portion of the sequence. Tomas Petricek has written a library, AsyncSeq (http://tomasp.net/blog/async-sequences.aspx), which I may investigate in a later post.
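To make the boundary problem concrete, here is a minimal sketch rather than the actual ProcessStream code. The helper name `appendToWindow`, the use of `float[]` for samples and the tiny window size of 3 are all assumptions chosen for illustration. It shows that Seq.windowed only sees a single buffer, while explicitly carrying the window from one buffer read to the next keeps the most recent samples across buffer boundaries.

```fsharp
// Hypothetical helper (not from the original code): append a newly read buffer
// to the current window and keep only the most recent `windowSize` samples.
let appendToWindow (windowSize: int) (window: float[]) (buffer: float[]) : float[] =
    let combined = Array.append window buffer
    if combined.Length <= windowSize then combined
    else Array.sub combined (combined.Length - windowSize) windowSize

// Seq.windowed applied to one buffer: it produces sliding windows inside that
// buffer only, and knows nothing about the buffers read before or after it.
let perBuffer =
    [| 1.0; 2.0; 3.0; 4.0 |]
    |> Seq.windowed 3
    |> Seq.toList

// Carrying the window as state across successive (simulated) buffer reads.
let buffers = [ [| 1.0; 2.0 |]; [| 3.0; 4.0 |]; [| 5.0 |] ]
let finalWindow = buffers |> List.fold (appendToWindow 3) [||]
// finalWindow holds the last three samples seen across all three buffers.
```

In the real app the buffers arrive asynchronously, so the fold above would be replaced by some piece of state that is updated on each read, which is the imperative part that feels awkward.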

The alternative to this would be to implement mailbox processors, having different agents for the buffering, windowing and sampling operations. I did start investigating this, but didn’t feel happy with them being pull-based (they don’t return data unless asked). I could have set them up to act more like a dataflow network, but it does seem to go against their intended use of managing state between different threads. I may revisit this in a future blog post.
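For illustration, a windowing agent along these lines might look roughly like the sketch below. It is not the code I experimented with: the message type `WindowMessage`, the function `createWindowAgent` and the window size of 3 are hypothetical. It does show the pull-based nature, though: the agent quietly accumulates samples, and nothing comes back out until a caller explicitly asks via PostAndReply.

```fsharp
// Hypothetical message type for a windowing agent.
type WindowMessage =
    | Append of float[]                          // push newly read samples in
    | GetWindow of AsyncReplyChannel<float[]>    // ask for the current window

// Hypothetical agent that keeps the most recent `windowSize` samples as its state.
let createWindowAgent (windowSize: int) =
    MailboxProcessor<WindowMessage>.Start(fun inbox ->
        let rec loop (window: float[]) = async {
            let! msg = inbox.Receive()
            match msg with
            | Append samples ->
                let combined = Array.append window samples
                let trimmed =
                    if combined.Length <= windowSize then combined
                    else Array.sub combined (combined.Length - windowSize) windowSize
                return! loop trimmed
            | GetWindow reply ->
                // Data only leaves the agent when someone requests it.
                reply.Reply window
                return! loop window }
        loop [||])

let agent = createWindowAgent 3
agent.Post(Append [| 1.0; 2.0 |])
agent.Post(Append [| 3.0; 4.0 |])
// Messages are processed in order, so this reply reflects both appends.
let current = agent.PostAndReply(fun reply -> GetWindow reply)
```

Turning this into a dataflow network would mean having each agent post its output to the next agent instead of waiting to be asked.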

I feel that even though F# does have nice features which helped me to quickly implement the app, RX would probably be a better fit. I guess I won’t know until I implement it in RX and compare the differences.